Creativity and intuition are definitely desired qualities in a marketer, but how do you know if your campaigns are actually working? By split testing! Split tests or A/B tests (more on the difference between the two later) show you exactly what aspects of your design and copy increase conversions, and which ones require some tweaking or changing.

It is possible, however, to make incorrect assumptions about what your audience prefers or what makes them click if your split tests aren’t performed carefully. In this guide, we break down exactly what split testing is and how it’s done, step by step.

What is a split test?

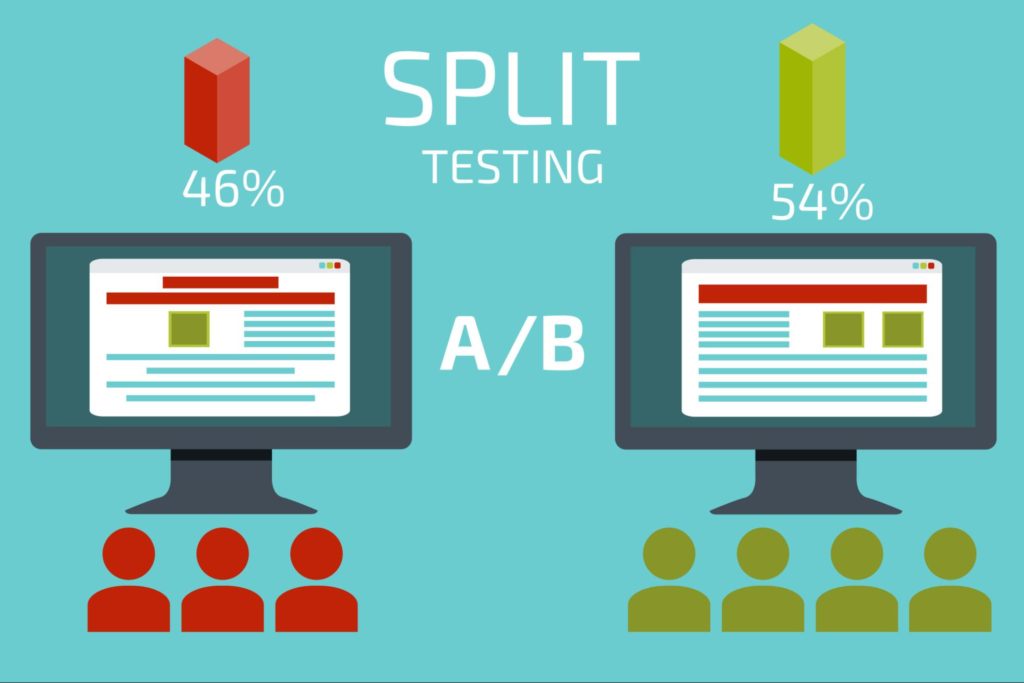

A split test is a method of testing multiple versions of a piece of content, such as a landing page, an ad, or an email, against each other to understand which one performs better. By ‘performs better’ we mean the one that leads to a higher conversion rate. A conversion could be any action you want people to complete, like clicking your ad, signing up for your newsletter, or downloading your app.

In other words, you create two versions of one piece of content that differ in a single element. Let’s say, for example, you want to check whether a CTA button would perform better at the top of a landing page instead of in the sidebar. To test this theory, you create two pages that are identical except for the CTA placement. Then you show these two versions to two similarly sized audiences over the same period of time and analyze which one was more successful.
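How the two audiences are chosen matters. Below is a minimal sketch, assuming each visitor has a stable ID, of one common approach: hash the ID so the split is effectively random, but a returning visitor always sees the same version. The function `assign_version` and its parameters are hypothetical illustrations, not the API of any particular testing tool.

```python
import hashlib


def assign_version(user_id: str, test_name: str, variant_share: float = 0.5) -> str:
    """Return 'control' or 'variant' for a visitor, giving the same answer on every visit."""
    # Hash the test name together with the user ID so each test gets its own independent split.
    key = f"{test_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "variant" if bucket < variant_share else "control"


# Usage: decide which CTA placement a (hypothetical) visitor sees.
print(assign_version("visitor-123", "cta-placement"))
```

Including the test name in the hash means the same visitor can land in different groups for different tests, so one test’s split doesn’t simply mirror another’s.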

A couple of terms you should know before we continue:

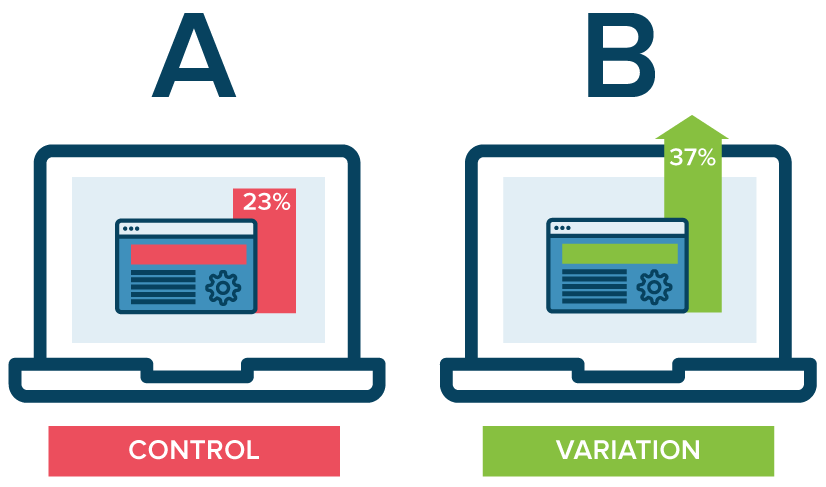

- Control – The original version of whatever you’re testing. In the example we used, that would be the landing page with the CTA button on the sidebar.

- Variant – The changed version of your control.

What’s the difference between split tests, A/B tests, and multivariate tests?

Strictly speaking, a split test compares three or more versions of a webpage while keeping all but one variable constant, whereas an A/B test compares just two versions: the control and a single variant. In practice, however, most marketers use these terms interchangeably.

When you test multiple variables at once rather than a single one, it’s called multivariate testing, and it’s a slightly different process. Once a site receives enough traffic, you can use multivariate testing to measure how different combinations of design elements affect the overall goal. Because every combination needs its own share of visitors, it is generally recommended only for sites with a substantial amount of daily traffic.
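To see why multivariate tests are so traffic-hungry, here is a small sketch that counts the versions produced by a hypothetical set of elements. The element names, their options, and the 1,000-visitors-per-version figure are assumptions for illustration only; real sample sizes depend on your conversion rates.

```python
from itertools import product
from math import prod

# Hypothetical page elements under test and the options for each.
elements = {
    "headline": ["Original", "Benefit-led"],
    "cta_copy": ["Sign up", "Get started", "Join free"],
    "hero_image": ["Photo", "Illustration"],
}

# Every combination of options is a separate version that needs its own traffic.
combinations = list(product(*elements.values()))
print(len(combinations))  # 12 (2 * 3 * 2)


def visitors_needed(per_version_sample: int, options: dict) -> int:
    """Total visitors needed if every version requires the same sample size."""
    return per_version_sample * prod(len(values) for values in options.values())


# With an assumed 1,000 visitors per version: 12,000 for this multivariate test,
# versus 2,000 for an A/B test of the CTA copy alone with two options.
print(visitors_needed(1_000, elements))
print(visitors_needed(1_000, {"cta_copy": ["Sign up", "Get started"]}))
```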

Why You Should Run Split Tests

Split testing offers a multitude of benefits to a marketing team. Above all, these tests are valuable because they are low-cost, simple to run, and can deliver a high reward. With the right tools, split testing gives you considerable control over most external factors, which makes the results far more reliable than guesswork.

Unlike multivariate testing, split testing doesn’t require many hard skills, and the method can be learned fairly quickly with the help of an intuitive tool.

How to Conduct a Split Test

1. Identify your goal

Although you’ll measure several metrics in any given test, choose a primary metric to focus on before you start. Collect all the relevant data to understand the current performance of the page you want to test. Then form a hypothesis about why you’re seeing these particular results and how to improve them. Your hypothesis should follow this template:

- Issue

- Proposed solution

- Success metrics

For example, the issue is that not enough people are clicking the ‘subscribe’ button on your email newsletter’s opt-in form. The solution you propose is changing the copy of the CTA button. You’ll know it works when sign-ups increase by 5% over the next 14 days.

If you don’t clearly define which metrics matter to you and how the changes you propose might affect user behavior, the test is unlikely to be effective.
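One way to keep the issue, proposed solution, and success metric together is to write the hypothesis down as a structured record. This is a minimal sketch; the `Hypothesis` class and its field names are hypothetical, and it assumes the “5% increase” in the newsletter example means a relative lift (5% above the current sign-up rate) rather than five percentage points.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    issue: str
    proposed_solution: str
    primary_metric: str
    target_relative_lift: float  # 0.05 means "5% higher than the baseline rate" (assumption)
    window_days: int

    def target_met(self, baseline_rate: float, observed_rate: float) -> bool:
        """True if the observed rate beats the baseline by at least the target lift."""
        if baseline_rate <= 0:
            raise ValueError("baseline_rate must be positive")
        lift = (observed_rate - baseline_rate) / baseline_rate
        return lift >= self.target_relative_lift


newsletter_test = Hypothesis(
    issue="Too few visitors click 'subscribe' on the newsletter opt-in form",
    proposed_solution="Change the copy of the CTA button",
    primary_metric="sign-up rate",
    target_relative_lift=0.05,
    window_days=14,
)

# Hypothetical rates: 4.0% before the change, 4.3% during the test (a 7.5% relative lift).
print(newsletter_test.target_met(baseline_rate=0.040, observed_rate=0.043))  # True
```

Meeting the target lift is not the same as the result being statistically significant; that is a separate check, covered in step 7.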

2. Pick one variable to test

Many variables can affect bounces, conversions, and overall user behavior: layout, copy, email subject lines, colors, and the list goes on. To evaluate how effective a change is, you need to isolate one ‘independent variable’ and measure its performance. Otherwise, you can’t pinpoint which variable was responsible for the improvement.

You can test more than one variable on a single webpage or email; just make sure you test them one at a time.

3. Create a ‘control’ and a ‘variant’

After you’ve defined your goal, your primary metric, and the variable you want to test, it’s time to create the two versions of your test subject. As we said, the ‘control’ is the original version of your webpage or email, and the ‘variant’ is the altered version that will run against it.
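If your page or email settings can be described as data, a quick check can confirm that the variant really differs from the control in only one element. This sketch uses hypothetical landing-page settings based on the CTA-placement example; the keys and values are illustrative, not any tool’s configuration format.

```python
# Hypothetical landing-page settings; only the element under test should differ.
control = {
    "headline": "Grow your list faster",
    "cta_text": "Subscribe",
    "cta_position": "sidebar",
    "button_color": "#1a73e8",
}

# Copy the control and change just the element being tested.
variant = {**control, "cta_position": "top"}


def changed_elements(a: dict, b: dict) -> set:
    """Return the keys whose values differ between two versions."""
    return {key for key in a.keys() | b.keys() if a.get(key) != b.get(key)}


diff = changed_elements(control, variant)
assert len(diff) == 1, f"Expected one changed element, found: {sorted(diff)}"
print(diff)  # {'cta_position'}
```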

4. Calculate your sample size and timing

In order to get reliable results, you need to run your split test long enough and with the right sample size. How you determine your sample size depends on the type of A/B test you’re running and the testing tool you’re using.
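To make “the right sample size” more concrete, here is a minimal sketch of the standard normal-approximation formula for comparing two conversion rates. The function name, the default significance level (alpha = 0.05) and power (0.8), and the example rates are assumptions chosen for illustration; testing tools may use different statistical methods, so their numbers can differ.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_version(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH version to detect a change from baseline_rate to
    expected_rate with a two-sided test at significance level alpha."""
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.8
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(baseline_rate * (1 - baseline_rate)
                                 + expected_rate * (1 - expected_rate)))
    return ceil(numerator ** 2 / (expected_rate - baseline_rate) ** 2)


# Hypothetical: a 4% baseline conversion rate, hoping to detect a rise to 5%.
print(sample_size_per_version(0.04, 0.05))
```

With these inputs the result is roughly 6,700 visitors per version. Because the required sample grows with the inverse square of the difference you want to detect, aiming for a rise to 4.5% instead needs nearly four times as many.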

An A/B test you run on an email can only be sent to a finite audience, and because newsletters go out fairly frequently, you don’t have the luxury of time. So you’ll probably want to send the A/B test to a subset of your list that is large enough to achieve statistically significant results; the ‘winner’ is then sent to the rest of your mailing list.
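Here is a minimal sketch of that email workflow: randomly draw two equal test groups from the list and hold back everyone else for the winning version. The 50,000-address list and the 5,000-per-group size are hypothetical; in practice the group size would come from your sample size calculation, and the fixed `seed` only makes the shuffle reproducible.

```python
import random


def split_mailing_list(subscribers: list, per_version: int, seed: int = 42):
    """Randomly pick two equal test groups; everyone else waits for the winning version."""
    if 2 * per_version > len(subscribers):
        raise ValueError("List is too small for the requested test size")
    shuffled = subscribers[:]  # copy so the original list is untouched
    random.Random(seed).shuffle(shuffled)
    group_a = shuffled[:per_version]                 # receives the control email
    group_b = shuffled[per_version:2 * per_version]  # receives the variant email
    remainder = shuffled[2 * per_version:]           # later receives the winner
    return group_a, group_b, remainder


subscribers = [f"subscriber{i}@example.com" for i in range(50_000)]
group_a, group_b, remainder = split_mailing_list(subscribers, per_version=5_000)
print(len(group_a), len(group_b), len(remainder))  # 5000 5000 40000
```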

If you’re testing something that doesn’t have a finite audience, like a landing page, then how long you keep your test running is directly affected by your sample size. You’ll need to keep the test running long enough to obtain a substantial number of views. The lower your traffic, the longer the test needs to stay active.
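For open-ended traffic like a landing page, the required sample size and your daily traffic together set the test’s length. This sketch assumes traffic is split evenly across versions; the one-week minimum (`min_days`) is an assumption added so the test covers both weekday and weekend behavior, not a rule from any specific tool. The 6,700-visitor figure is a hypothetical input.

```python
from math import ceil


def days_to_run(per_version_sample: int, versions: int, daily_visitors: int,
                min_days: int = 7) -> int:
    """Days needed for every version to reach its sample size, given evenly split traffic.
    min_days (an assumption) keeps the test running for at least one full week."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = per_version_sample * versions
    return max(min_days, ceil(total_needed / daily_visitors))


print(days_to_run(6_700, versions=2, daily_visitors=2_000))  # 7
print(days_to_run(6_700, versions=2, daily_visitors=300))    # 45
```

The two calls illustrate the point above: the same test that finishes in a week on a busy page takes about six weeks on a low-traffic one.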

5. Choose a testing tool

When choosing a testing tool, there are a couple of features and functions that you must consider. Make sure your tool of choice allows you to do the following:

- Set up tests without developer help.

- Run multiple tests in parallel but on isolated audiences, to ensure the different test results aren’t influenced by one another.

- Easily create hypotheses and test versions using its built-in features.

- Quickly access accurate results to see whether or not your hypothesis is correct.
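The “isolated audiences” requirement can be met by giving each test its own slice of traffic. This minimal sketch, assuming stable user IDs, hashes each user into one of 100 buckets and reserves a distinct bucket range for each test, so no user is ever in two tests at once. The test names and the 50/50 bucket allocation are hypothetical.

```python
import hashlib
from typing import Optional

# Hypothetical tests running in parallel, each given its own slice of 100 traffic buckets.
EXPERIMENT_BUCKETS = {
    "cta-copy": range(0, 50),      # buckets 0-49
    "hero-image": range(50, 100),  # buckets 50-99
}


def bucket_for(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket number from 0 to buckets - 1."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def experiment_for(user_id: str) -> Optional[str]:
    """Return the single test this user belongs to, or None if no test claims the bucket."""
    bucket = bucket_for(user_id)
    for name, bucket_range in EXPERIMENT_BUCKETS.items():
        if bucket in bucket_range:
            return name
    return None


print(experiment_for("visitor-123"))
```

Within each test, users would then still be split into control and variant; the bucket ranges only decide which test a user takes part in.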

Luckily, there is a wide array of efficient tools to choose from.

6. Test both variations simultaneously

This may sound obvious, but timing plays a significant role in any marketing campaign’s results. If you were to run your control in May and your variant in June, you would likely see different results, and it would be very hard (near impossible) to determine whether the difference is due to the change you made or to the different month.

The only exception is if the variable you’re testing is timing itself, like finding the optimal time of day to send out emails.

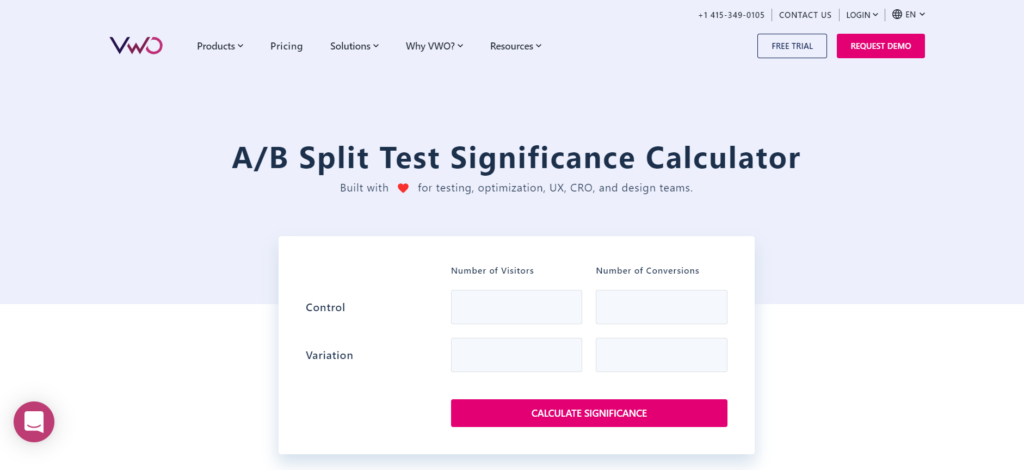

7. Measure the significance of your results

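Once you’ve determined which variation performs best according to your primary metric, you need to check whether your results are statistically significant. In other words, is the difference large enough, given the number of people in the test, that it’s unlikely to be down to chance and is therefore enough to justify a change?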

There are various calculators that can help you determine the reliability of your data based on visitor numbers and conversions, such as those offered by Optimizely and VWO.

These calculators will work out the confidence level your data produces for the winning variation.
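The exact method behind these calculators varies. As one illustration, here is a minimal sketch of a two-proportion z-test, a common frequentist way to compare two conversion rates. It assumes visitors were randomly assigned and that the samples are reasonably large. “Confidence” here is simply 1 minus the p-value, which is one way calculators present it; Optimizely or VWO may compute their figures differently. The conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int):
    """Two-sided z-test comparing the conversion rates of control (a) and variant (b).
    Returns (z, p_value, confidence), where confidence = 1 - p_value."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate under the assumption that both versions convert equally well.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 0.0, 1.0, 0.0  # no conversions (or all conversions): nothing to compare
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value, 1 - p_value


# Hypothetical results: 200 of 5,000 converted on the control, 260 of 5,000 on the variant.
z, p, confidence = two_proportion_z_test(200, 5_000, 260, 5_000)
print(f"z = {z:.2f}, p = {p:.4f}, confidence = {confidence:.1%}")
```

With these numbers, z comes out at roughly 2.9 and p is well below 0.05, so the variant’s higher rate would usually be treated as significant at the common 95% level.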

Final Thoughts

Building a successful marketing campaign requires trial and error. And even when something is working, there is always a way to make it work even better. Split testing is a vital conversion rate optimization (CRO) practice. It enables marketers to make data-based changes that help their websites and related campaigns keep increasing business revenue.

As Neil Patel put it, “there is no deep secret to split testing.” Just be consistent. The more you test, the better you will get to know your target audience.